But the reasons why it can be hard to combine machines and humans are also central to why they work so well together, says Baeck. AI can operate at speeds and scales far out of our reach, but machines are still a long way from replicating human flexibility, curiosity and grasp of context.

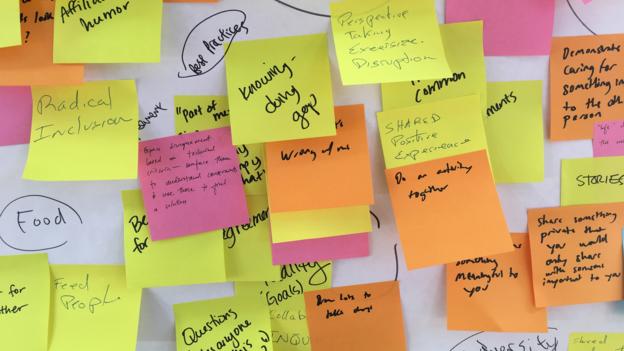

A recent report Baeck co-authored with Nesta senior researcher Aleks Berditchevskaia identified a number of ways AI could enhance our collective intelligence. These include helping us make better sense of data, finding better ways to coordinate decision making, helping us overcome our inherent biases and highlighting unusual solutions that are often overlooked.

But the report also showed that combining AI tools with human teams requires careful design to avoid unintended consequences. And there is currently a dearth of research into how groups react to being corralled by AI, says Berditchevskaia, making it hard to predict how effective these systems will be in the wild.

“It can potentially stretch us in new ways or enhance our speed when we need to be reacting quicker,” she adds. “But we’re still at very early stages of understanding and being able to navigate the individual reactions to those kinds of AI system in terms of issues of trust and how it affects their own sense of agency.”

Humanising AI

Our combined wisdom can also help give a more human element to AI technology, and better guide its decisions.

London-based start-up Factmata, which has built an AI moderation system, has enlisted more than 2,000 experts, including journalists and researchers, to analyse online content for things including bias, credibility and hate speech. The company then used this analysis to train a natural-language processing system to automatically scan web pages for problematic content.

“Once you have that trained algorithm it can scale to those millions of pieces of content across the internet,” says CEO Dhruv Ghulati. “You’re able to scale up the critical assessment of those experts.”

While AI is often trained on data labelled by experts in a one-off process, Factmata’s experts continually refresh the training data to make sure the algorithms can keep up with the ever-shifting political and media landscape. They also let members of the public give feedback on the AI’s output, which Ghulati says ensures it remains relevant and free of bias itself.

Entwining ourselves and our decisions ever more tightly with AI is not without risks, however. The synergy between AI and collective intelligence works better the more information we give the machine, says Woolley, which involves difficult choices about how much privacy we’re happy to surrender.